
New Compliance Tool Says Many AI Firms Fail to Meet Security, Fairness Standards

Large language models developed by Meta and Mistral AI are among a dozen artificial intelligence models that fail to meet the cybersecurity and fairness requirements of the European Union AI Act, which went into effect on Aug. 1, said developers of a new open-source AI evaluation tool.


The tool, COMPL-AI, is built on a framework that uses 27 evaluation benchmarks, such as "goal hijacking and prompt leak" and "toxicity and bias," to determine whether AI models comply with the EU AI Act. The models tested included closed-source systems such as OpenAI's GPT-4 Turbo and Anthropic's Claude 3 Opus, as well as the open-source models Llama 2-7B Chat and Mistral-7B Instruct.

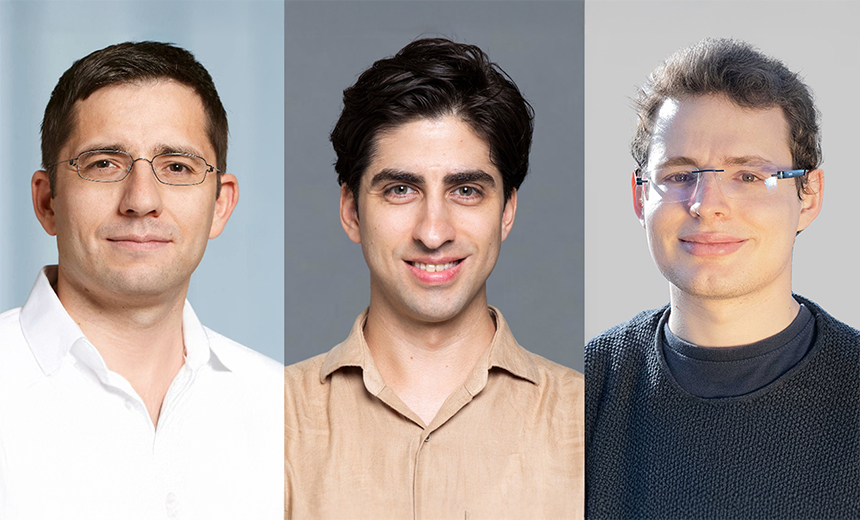

COMPL-AI was developed by ETH Zurich computer science professor Martin Vechev and PhD student Robin Staab; Petar Tsankov, CEO of Zurich-based software developer LatticeFlow; and the Institute for Computer Science, Artificial Intelligence and Technology in Bulgaria. Its evaluation found that while the models performed poorly on cybersecurity and on "ensuring the absence of discrimination," they performed well at preventing and identifying harmful and toxic content.

"AI models show diverse scores on cyberattack resilience, with many scoring below 50%," said Vechev, a university professor and co-founder of LatticeFlow. "While Anthropic and OpenAI have successfully aligned their models to score against jailbreaks and prompt injections, open-source vendors like Mistral have put less emphasis on this, making smaller models noticeably less robust compared to larger models."

In this video interview with Information Security Media Group, Vechev, Staab and Tsankov also discussed:

- How the tested AI models fail to meet EU AI Act cybersecurity requirements;

- The impact of AI governance on machine learning operations;

- AI market trends in the EU over the next five years.

Vechev is a professor in the Department of Computer Science at ETH Zurich and a co-founder of LatticeFlow. His areas of focus include programming languages, machine learning and computer security.

Tsankov, co-founder and CEO of LatticeFlow, also serves as a senior researcher and lecturer at the Secure, Reliable and Intelligent Systems Lab at ETH Zurich.

Staab is a computer scientist and PhD student at ETH Zurich.