Agentic AI, Artificial Intelligence & Machine Learning, Next-Generation Technologies & Secure Development

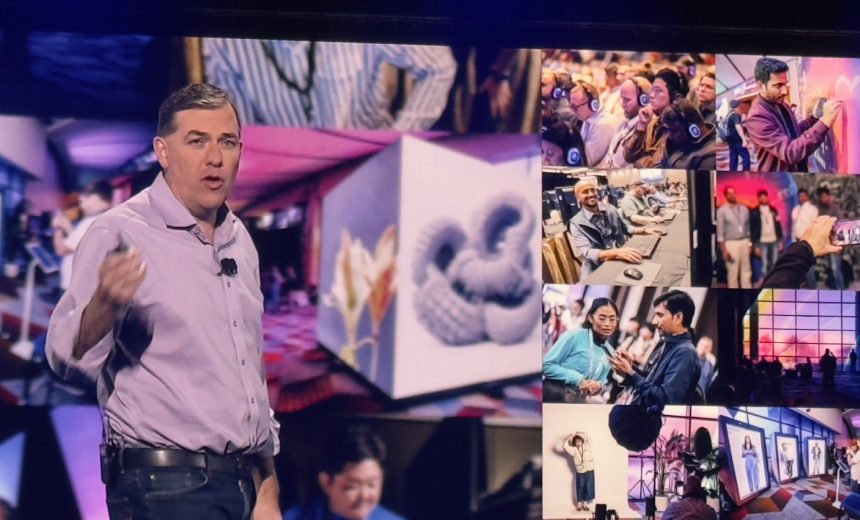

CEO Matt Garman Shares Plans for Developing Billions of Autonomous Agents

For two decades, AWS has been the undisputed leader in cloud computing, but to hear AWS CEO Matt Garman tell it at the re:Invent 2025 conference, the future isn’t in the infrastructure layer. Garman envisions a fundamental shift from building applications in the cloud to managing a cloud of autonomous artificial intelligence agents.

Garman told attendees Tuesday that “80 to 90% of enterprise AI value will come from agents,” and that will drive a fundamental architectural reset for all IT organizations. He said AWS plans to retrain its developer base to focus on orchestrating complex behaviors rather than writing monolithic code.

AWS hopes to become the foundation for a more complex “digital organism” – networks of “billions of agents” that can reason, collaborate, act and adapt across workflows that currently require armies of developers and millions of lines of code.

Cloud computing is entering its autonomous phase, Garman told the audience at the annual conference in Las Vegas, which attracts up to 70,000 users and partners. The real measure of a cloud platform, he said, will no longer be how many applications it can run but how many reliable, well-behaved agents it can support without collapsing under their collective weight.

AWS is now talking about infrastructure performance in terms of AI. Trainium 3, with its 3-nanometer architecture, and the upcoming Trainium 4 are not just incremental chip updates. They are positioned as the backbone of an inference-heavy world in which agents operate continuously rather than intermittently.

The new UltraServers are positioned to be the core of an industrial-scale AI factory floor. Even the longstanding NVIDIA partnership is being reinterpreted as a collaboration, implying that success in the agentic era will depend as much on how systems behave day after day as on benchmark performance.

Beyond the silicon, AWS is refusing to play a single-model game. The Nova family with Lite, Pro, Sonic and Omni versions looks like a casting call for roles in a large, distributed cognitive system. Nova Lite handles the high-volume operational work. Nova Pro handles complex reasoning and Nova Sonic gives agents voices. The latest version, Nova Omni, enables agents to see, interpret and create. It is unmistakably a system design for a future cloud of agents.
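The division of labor described above can be pictured as a simple routing table. What follows is a minimal sketch, not AWS code: the `TaskKind` categories, the `pick_model` helper and the role labels are hypothetical names chosen for illustration, and the labels are not real Bedrock model identifiers.

```python
from enum import Enum


class TaskKind(Enum):
    OPERATIONAL = "operational"  # high-volume routine work
    REASONING = "reasoning"      # complex, multi-step reasoning
    VOICE = "voice"              # spoken interaction
    MULTIMODAL = "multimodal"    # seeing, interpreting, creating


# Hypothetical role labels based on the article's description,
# not real Bedrock model identifiers.
ROLE_FOR_TASK = {
    TaskKind.OPERATIONAL: "nova-lite",
    TaskKind.REASONING: "nova-pro",
    TaskKind.VOICE: "nova-sonic",
    TaskKind.MULTIMODAL: "nova-omni",
}


def pick_model(kind: TaskKind) -> str:
    """Return the illustrative model role for a given kind of task."""
    return ROLE_FOR_TASK[kind]
```

In a sketch like this, an orchestrator would call `pick_model(TaskKind.VOICE)` before handing a spoken request to an agent, keeping the cheaper model on routine work and reserving the others for the roles they are cast in.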

But the most intriguing move is Nova Forge, AWS’ attempt to redefine who can own a frontier model. If successful, enterprises will no longer need to choose between costly model training and limited fine-tuning.

Instead, enterprises will build their own domain-specific frontiers from Nova checkpoints and proprietary datasets, then run them inside Bedrock with the same guardrails AWS applies to itself. Essentially, AWS hopes to transform the frontier model from a scarce, centralized resource into a customizable industrial tool. If this shift proves operationally viable, it could significantly change the power structure of the entire AI ecosystem.

Bedrock Agents are at the core of AWS’ ambitions. The company recognizes that building agents is easy. The difficult part is ensuring that thousands of them operate safely, consistently and within the boundaries set by enterprises. The new policy engine, which converts natural-language rules into constraints that are enforced at runtime, reflects an understanding that governance, rather than generation, will determine the success of the agentic future. The evaluations system – essentially behavioral quality control for agents – continues this focus. AWS aims to give enterprises what they lacked in the early days of cloud: a way to trust the system at scale.
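To make the idea of runtime enforcement concrete, here is a minimal sketch of a check that runs before an agent is allowed to call a tool. It assumes the natural-language rule has already been converted into a structured form. The `Rule`, `ToolCall` and `is_allowed` names, and the refund example, are hypothetical and do not reflect the actual AWS policy engine or its API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A constraint in structured form (assumed output of a rule compiler)."""
    tool: str
    field: str
    max_value: float


@dataclass(frozen=True)
class ToolCall:
    """A tool invocation an agent wants to make."""
    tool: str
    args: dict


def is_allowed(call: ToolCall, rules: list[Rule]) -> bool:
    """Allow the call unless a rule for the same tool is violated."""
    for rule in rules:
        if rule.tool != call.tool:
            continue
        value = call.args.get(rule.field)
        # Fail closed: a missing or non-numeric field is denied.
        if not isinstance(value, (int, float)) or value > rule.max_value:
            return False
    return True


# "Refunds may not exceed 500 dollars", in the assumed compiled form.
rules = [Rule(tool="issue_refund", field="amount", max_value=500)]
```

Under these assumptions, a refund of 120 passes the check and a refund of 900 is blocked before the tool ever runs. The point of the design is that the limit lives outside the agent, so it holds no matter what the model generates.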

Competing for AI Platform Share

AWS’ road map, featuring AgentCore, is designed to challenge rivals’ integration-first strategies. Microsoft’s Agent 365 and Microsoft Entra Agent ID, for example, position the autonomous agent as an extension of the Microsoft 365 user.

Microsoft’s approach appeals directly to CIOs who value seamless productivity and governance aligned with their existing Microsoft security perimeter. In contrast, Google Cloud promotes an open ecosystem with Vertex AI and the Agent Development Kit, targeting organizations that emphasize advanced R&D, multimodal capabilities such as Lyria and Veo 3, and developer-driven innovation.

Around these core layers, AWS is selectively developing its own agents where it has native authority – within developer workflows, customer service systems and modernization pipelines filled with legacy code. Amazon Q, for example, aims to demonstrate what agent-centric work could look like when integrated across a company’s entire knowledge graph.

Amazon Connect’s new conversational features show how agents can serve as the front door to customer interactions. With its new AWS Transform offering, the company aims to turn decades of promises about “modernization” into something approaching automation. None of these agents is an end in itself. They are designed to illustrate what becomes possible once the platform is truly agent-native.

Going All In on AI Agents

The final piece of the puzzle is cultural, not technical. By standardizing internally on Kiro, its agentic development environment, Amazon signals that it plans to retrain its entire developer base to think in terms of behaviors, specifications and agent orchestration rather than traditional software development. It is rare to see a company of AWS’ scale attempt such a significant internal cognitive shift. It shows how seriously AWS believes in the agentic model: It’s willing to bet the future of its engineering culture on it.

Taken together, the announcements in Garman’s keynote amount to more than another product cycle moment. They are AWS showing its hand: a belief that the future of enterprise technology will be built not by writing more applications, but by constructing, coordinating and governing fleets of autonomous digital workers. And while every vendor in the industry is now speaking the language of agents, AWS is doing something different – rebuilding its stack, tools, governance model and even internal workflow patterns around the assumption that agents, not humans, will be the primary consumers of compute in the next decade.

AWS wants to define the architecture of the post-application world, where human intent is expressed through agents, and cloud infrastructure quietly orchestrates everything behind them. In the looming battle for the next-generation platform, AWS is going all in.