In the Spotlight: Quality Assurance, Business Resilience, Single Points of Failure

Anyone who might have doubted the extent to which businesses run on IT systems need look no farther than how a small software flaw in CrowdStrike’s Falcon cybersecurity software has caused global disruption. Some say it might not stand as just the biggest IT disruption this year, but in history.

See Also: How to Empower IT with Immutable Data Vaults

Experts say the incident is a reminder that all businesses, whether or not they use any particular brand of security software, need to maintain robust business reliance and incident response capabilities, since unexpected IT outages can happen at any moment (see: Banks and Airlines Disrupted as Mass Outage Hits Windows PCs).

Expect publicly traded CrowdStrike to face tough questions from investors, regulators and customers over its change management and testing practices, as well as bigger-picture questions about software vendors, single points of failure and whether operating systems themselves need better defenses against misbehaving software.

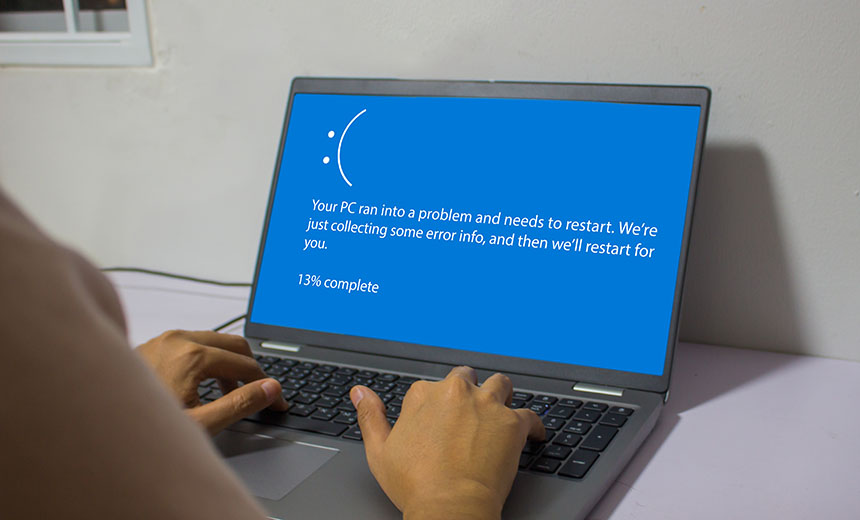

Just look at the impact of this one software flaw, which led to many Windows PCs displaying a “blue screen of death” and rebooting in an unstoppable loop. The resulting outages have led to canceled hospital procedures, planes and trains; left customers unable to access banking applications or pay in-person at some stores with credit cards; stopped major media outlets from broadcasting live news; and much more.

“I don’t think it’s too early to call it: this will be the largest IT outage in history,” said Australian cybersecurity and data breach expert Troy Hunt in a post to social platform X. “This is basically what we were all worried about with Y2K, except it’s actually happened this time.”

Even so, accidents happen, and Wall Street analysts said they expect this episode to have little or no long-term impact on CrowdStrike’s reputation or stock value.

CrowdStrike CEO George Kurtz appeared on NBC News Friday morning to apologize. “We’re deeply sorry,” he said, tracing the outage to a flaw in code delivered to some customers via the company’s automatic software updates. “That update had a software bug in it and caused an issue with the Microsoft operating system.”

“Today was not a security or cyber incident,” Kurtz said in a statement to Information Security Media Group. “Our customers remain fully protected. We understand the gravity of the situation and are deeply sorry for the inconvenience and disruption. We are working with all impacted customers to ensure that systems are back up and they can deliver the services their customers are counting on.”

CrowdStrike said it’s fixed the flaw and begun distributing the fix via automated software updates, and administrators say this has fixed some affected PCs, servers and virtual servers. The vendor has also urged all customers to keep a close eye on its support portal and liaise with company representatives.

Even so, multiple IT administrators have reported having to manually fix physical systems stuck in a rebooting loop. As a result, fixing all affected systems could take a substantial amount of time, unless CrowdStrike or perhaps Microsoft can deliver more automated ways of remediating the problem.

Numerous IT administrators say they’ve cleared their calendar to work through the weekend. Many anticipate having to go on-site to manually recovery any PCs stuck in a reboot loop, and triage remains the order of the day. “Organizations will need to prioritize the systems that are most critical to their business and recover them in order of priority,” said cybersecurity consultant Brian Honan, who heads Dublin-based BH Consulting.

Triaging the IT teams themselves may also required. “While many affected organizations will want to return to business-as-usual as soon as possible, IT staff will need support beyond the immediate hours of response – particularly as the mental and physical impact of cyber incidents is often overlooked,” said Pia Hüsch, a research fellow at Royal United Services Institute, a British defense and security think tank.

Important Safety Feature: Crash Rejection

This isn’t the first time a bad software update has led to systems crashing then rebooting in an unending loop. One oft-asked question is what a vendor does next.

Content delivery network giant Akamai faced this problem 20 years ago, when a bad metadata update, specifying how each customer’s traffic should be handled, got distributed in an update. Cue crashing – aka rolling – servers that encountered the bad update, crashed and rebooted, encountered the bad update again, and kept on crashing and rebooting, said Andy Ellis, Akamai’s CISO at the time, who served in the role for 25 years.

“We had fast rollbacks, at least, and the incident was very quickly cleaned up. But in doing safety analysis, this was a hazard that we wanted better mitigation, so we adopted crash rejection,” said Ellis, who’s now an operating partner at cybersecurity venture capital firm YL Ventures, in a post to X.

Akamai’s approach to crash rejection involved its software receiving updates and putting them in a temporary folder, testing the update to make sure it worked, and if so only then moving it out of the temporary folder and into its permanent location.

“If things didn’t go well? The application would crash, and on restart it would never notice the toxic update. It had auto-reverted, suffering only a single crash in the meantime,” Ellis said.

This approach won’t fix every type of automatic software update problem, and can be complex to code because other problems can also cause an application to crash. “But if you’re writing dynamically updatable software, crash rejection is one of the many safety practices you need to incorporate,” he said.

Beyond looking at what CrowdStrike should do, the outage also highlights how OS-level protections didn’t prevent this type of software from consigning Windows systems to an endless cycle of crash and reboot, said J.J. Guy, CEO of Sevco Security.

“That is the result of poor resiliency in the Microsoft Windows operating system,” he said in a post to LinkedIn. “Any software causing repeated failures on boot should not be automatically reloaded. We’ve got to stop crucifying CrowdStrike for one bug, when it is the OS’s behavior that is causing the repeated, systemic failures.”

Resiliency Questions

For anyone not versed in cybersecurity, the outages caused by the CrowdStrike bug, which began Thursday and appeared to peak on Friday, are a reminder of the effect any IT outage can have on businesses, partners and customers. Cue the need for robust resiliency planning inside every enterprise, regardless of what software or services they use.

“Organizations need to look at cyber risks as business risks and not simply IT risks and plan to manage them accordingly,” said Honan, who founded Ireland’s first computer emergency response team. “In particular, organizations need to design, implement and regularly test robust cyber resilience and business continuity plans not only for their own systems but also for those services and systems they rely on within their supply chain.”

Experts recommend organizations develop business resilience plans designed to deal with top threats they face, which may include unexpected IT outages due to ransomware attacks, employee error or natural disasters. Even more important, they say, is to practice these plans, not least because to be effective, they must involve many different parts of an organization – not just IT – and have a top-down mandate (see: Incident Response: Best Practices in the Age of Ransomware).

Regulatory Compliance Oversight

This shouldn’t be news to cybersecurity-savvy boards of directors. In the EU, both the Network and Information Security Directive 2, or NIS2, as well as Digital Operational Resilience Act, or DORA, require regulated organizations to take “the appropriate steps to manage cyber risk within their own organizations and just as importantly within their supply chain,” Honan said.

Cybersecurity teams have a crucial role to play for not just defending systems, but helping to get them back up and running in the event of an incident – be that an attack, error by an employee or bad software update from a vendor.

This weekend, IT teams will be burning the midnight oil to try and restore affected systems. After that, expect CIOs and CISOs to be asking what lessons need to be learned from the CrowdStrike outages.

“I can tell you: If ‘bad vendor update’ is not part of an incident response playbook, it should be on Monday,” said Ian Thornton-Trump, CISO of Cyjax.