
Now-Fixed Flaw Is Big Sleep’s First Real-World Bug Find, Say Researchers

Google’s “highly experimental” artificial intelligence agent discovered a previously undetected and exploitable memory flaw in the popular open-source database engine SQLite.

The discovery of the stack buffer underflow flaw in a development version of SQLite marks the tech giant’s “first real-world vulnerability” uncovered with an AI agent outside the test sandbox, the company said. SQLite’s developers fixed the flaw in October, the same day Google reported it.
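
A stack buffer underflow occurs when a program reads or writes memory located before the start of a buffer allocated on the stack, typically through a negative index. Python has no raw stack memory, so the sketch below is only an analogy under stated assumptions. It is not SQLite's code, and the names `write_unchecked`, `write_checked` and `SENTINEL` are hypothetical. It shows how a sentinel value such as -1, used as an index without a bounds check, silently lands in the wrong place, and how a range check rejects it.

```python
# Illustrative analogy only: Python has no raw stack buffers, and this is
# not SQLite's code. A negative index (e.g. a -1 "no valid slot" sentinel)
# is the classic way a buffer underflow is triggered in C.

SENTINEL = -1  # hypothetical sentinel value meaning "no valid slot"


def write_unchecked(buf: list[int], idx: int, value: int) -> None:
    # In C, buf[-1] would touch memory *before* the buffer (an underflow).
    # In Python, buf[-1] silently wraps to the last element instead, so the
    # write still lands in the wrong place without raising any error.
    buf[idx] = value


def write_checked(buf: list[int], idx: int, value: int) -> None:
    # Defensive version: reject any index outside [0, len(buf)).
    if not 0 <= idx < len(buf):
        raise IndexError(f"index {idx} outside buffer of size {len(buf)}")
    buf[idx] = value


if __name__ == "__main__":
    slots = [0, 0, 0, 0]
    write_unchecked(slots, SENTINEL, 99)
    print(slots)  # [0, 0, 0, 99]: the last slot was clobbered silently
    try:
        write_checked(slots, SENTINEL, 99)
    except IndexError as exc:
        print("rejected:", exc)
```

In C the unchecked write would corrupt adjacent stack memory rather than another slot, which is what makes such flaws potentially exploitable.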

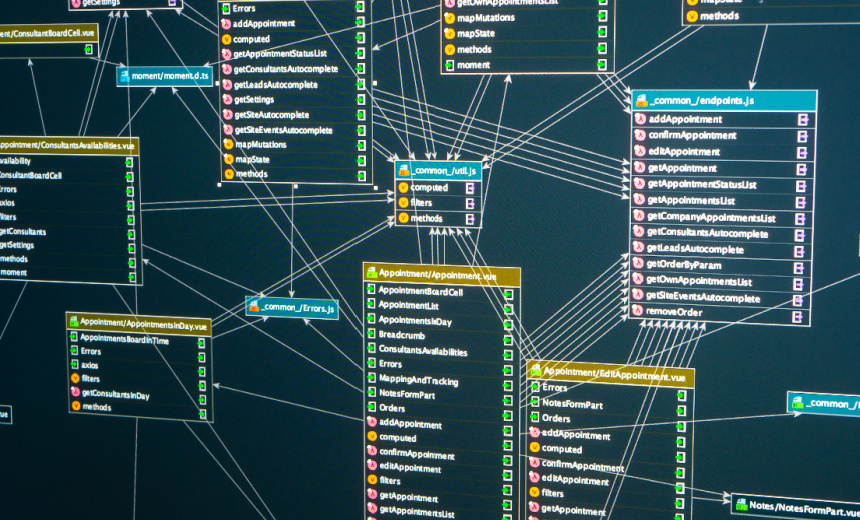

AI agents are systems built on large language models that autonomously carry out assigned tasks with little or no human intervention. Google’s agent, like the project under which it was developed, is called Big Sleep; the project is a collaboration between Project Zero and Google DeepMind. Big Sleep is an “evolution” of Project Zero’s Naptime, a framework Google introduced in June to let LLMs autonomously perform basic vulnerability research. The framework was designed to help LLMs test software for potential flaws in a human-like workflow, giving them a code browser, a debugger, a reporter tool and a sandboxed environment for running Python scripts and recording their output (see: Google’s Zero-Day Hunters Test AI for Security Research).
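
The tool-based workflow described above can be sketched as a simple dispatch loop. This is a hypothetical illustration, not Google's implementation: the tool names, their canned return values and the static `plan` list (which stands in for choices a real LLM would make one step at a time, based on earlier tool output) are all assumptions. The loop runs each requested tool, records its output in a transcript the model could read, enforces a step budget and stops when the reporter tool is called.

```python
from typing import Callable

# Hypothetical stand-ins for the kinds of tools Naptime exposes to the model.
# Real tools would browse source, drive a debugger and execute sandboxed code;
# these just return strings so the control flow is easy to follow.
def code_browser(arg: str) -> str:
    return f"source of {arg}"


def debugger(arg: str) -> str:
    return f"ran {arg} under debugger: crash"


def python_sandbox(arg: str) -> str:
    return f"executed script {arg!r}"


def reporter(arg: str) -> str:
    return f"REPORT: {arg}"


TOOLS: dict[str, Callable[[str], str]] = {
    "code_browser": code_browser,
    "debugger": debugger,
    "python": python_sandbox,
    "reporter": reporter,
}


def run_agent(plan: list[tuple[str, str]], max_steps: int = 10) -> list[str]:
    """Dispatch tool calls in order. `plan` stands in for the LLM's choices."""
    transcript: list[str] = []
    for step, (tool_name, arg) in enumerate(plan):
        if step >= max_steps:
            break  # hard budget so a confused model cannot loop forever
        tool = TOOLS.get(tool_name)
        if tool is None:
            # Record bad tool requests instead of crashing the loop.
            transcript.append(f"error: unknown tool {tool_name}")
            continue
        transcript.append(tool(arg))  # a real agent feeds this back to the LLM
        if tool_name == "reporter":
            break  # calling the reporter tool ends the task
    return transcript


if __name__ == "__main__":
    plan = [
        ("code_browser", "target_function"),  # hypothetical function name
        ("python", "make_testcase.py"),       # hypothetical script name
        ("debugger", "testcase.sql"),
        ("reporter", "crash in target_function"),
        ("python", "never reached"),          # ignored: reporter already ran
    ]
    for line in run_agent(plan):
        print(line)
```

Ending the task on the reporter call mirrors the idea that the agent decides when it has enough evidence to file a report.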

In the project’s latest case, researchers instructed the Gemini 1.5 Pro-powered Big Sleep to consider a previous SQLite vulnerability and look for similar flaws in newer versions of the software. They gave it recent commit messages and diffs from the SQLite repository and asked it to find unresolved issues. The researchers said Big Sleep autonomously connected the earlier vulnerability to other parts of the code, developed a test case, ran it in the sandbox to trigger a crash with the exploitable bug, generated a root-cause analysis of the flaw and put together a report. The agent’s summary of its findings was “almost ready to report directly,” they said.
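
Big Sleep reasoned over the commit messages and diffs with an LLM rather than with pattern matching, but the kind of input it received can be illustrated with a much cruder, hypothetical pre-filter: parse a unified diff, keep only the lines it adds, and flag those that resemble the code involved in an earlier bug. The diff, file name and regular expression below are invented for illustration and do not come from SQLite.

```python
import re
from typing import Iterator, NamedTuple, Optional

# Matches a unified-diff hunk header such as "@@ -10,3 +10,4 @@".
# Groups: old line count, new start line, new line count (counts default to 1).
HUNK_RE = re.compile(r"^@@ -\d+(?:,(\d+))? \+(\d+)(?:,(\d+))? @@")


class Finding(NamedTuple):
    path: Optional[str]
    line_no: int
    text: str


def added_lines(diff: str) -> Iterator[tuple[Optional[str], int, str]]:
    """Yield (path, new-file line number, text) for each line the diff adds."""
    path: Optional[str] = None
    new_line = old_left = new_left = 0
    for raw in diff.splitlines():
        if old_left > 0 or new_left > 0:
            # Inside a hunk body: the first character classifies the line.
            if raw.startswith("+"):
                yield path, new_line, raw[1:]
                new_line += 1
                new_left -= 1
            elif raw.startswith("-"):
                old_left -= 1  # removed lines exist only in the old file
            elif raw.startswith("\\"):
                pass  # "\ No newline at end of file" marker
            else:
                # Context line: present in both the old and new file.
                new_line += 1
                old_left -= 1
                new_left -= 1
        elif raw.startswith("+++ "):
            # "+++ b/src/file.c" -> "src/file.c"
            path = raw[4:].split("\t")[0].removeprefix("b/")
        elif (m := HUNK_RE.match(raw)) is not None:
            old_left = int(m.group(1) or 1)
            new_line = int(m.group(2))
            new_left = int(m.group(3) or 1)


def flag_variants(diff: str, pattern: str) -> list[Finding]:
    """Return added lines matching a regex that describes an earlier bug."""
    rx = re.compile(pattern)
    return [Finding(p, n, t) for p, n, t in added_lines(diff) if rx.search(t)]


# Invented diff, for illustration only.
SAMPLE_DIFF = """\
--- a/src/example.c
+++ b/src/example.c
@@ -10,3 +10,4 @@
 int pick(int col){
-  return 0;
+  int slots[4] = {0};
+  slots[col] = 1;  /* col could be a negative sentinel */
 }
"""
# Hypothetical "shape" of the earlier bug: an array written with a
# variable (not constant) index.
VARIANT_PATTERN = r"\w+\[[A-Za-z_]\w*\]\s*="

if __name__ == "__main__":
    for f in flag_variants(SAMPLE_DIFF, VARIANT_PATTERN):
        print(f"{f.path}:{f.line_no}: {f.text.strip()}")
```

A regex like this produces many false positives and misses anything phrased differently, which is the gap the researchers hoped an LLM's reasoning could close.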

“We think that this work has tremendous defensive potential. Finding vulnerabilities in software before it’s even released, means that there’s no scope for attackers to compete: the vulnerabilities are fixed before attackers even have the chance to use them,” the researchers said.

The Project Zero team had previously said AI agents could replicate – and eventually outperform – the systematic methods of human security researchers, such as manual code audits and reverse engineering, and be able to “close some of the blind spots of current automated vulnerability discovery approaches, and enable automated detection of ‘unfuzzable’ vulnerabilities.”

The researchers were unable to rediscover the SQLite vulnerability with conventional fuzzing, even after 150 CPU-hours of testing. This was likely because American Fuzzy Lop, a tool traditionally used to fuzz SQLite, had “reached a natural saturation point” after extended use. The researchers acknowledged, however, that a target-specific fuzzer “would be at least as effective” as the AI agent at detecting such vulnerabilities.
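
Coverage-guided fuzzers such as American Fuzzy Lop mutate inputs and keep the ones that reach code not seen before. When new coverage stops appearing, a campaign is said to have saturated, although bugs can still hide in paths the mutations almost never produce. The toy sketch below is not AFL: the target function, the one-byte mutation strategy and the `window` threshold in the hypothetical `saturation_point` helper are all assumptions chosen for illustration. It records how many branches are covered after each run and reports where that count flattens out.

```python
import random
from typing import Optional


def target(data: bytes) -> set[str]:
    """Toy program under test: returns the IDs of the branches it executed."""
    hit = {"entry"}
    if data[:1] == b"S":
        hit.add("b1")
        if data[:2] == b"SQ":
            hit.add("b2")
            if data[:3] == b"SQL":
                hit.add("b3")  # deep branch: needs three specific bytes in a row
    return hit


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Overwrite one random byte or append a random byte."""
    buf = bytearray(data or b"\x00")
    pos = rng.randrange(len(buf) + 1)
    if pos == len(buf):
        buf.append(rng.randrange(256))
    else:
        buf[pos] = rng.randrange(256)
    return bytes(buf)


def fuzz(iterations: int, seed: int = 0) -> list[int]:
    """Coverage-guided loop; returns total branches covered after each run."""
    rng = random.Random(seed)
    corpus = [b""]
    covered: set[str] = set()
    history: list[int] = []
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        hit = target(candidate)
        if not hit <= covered:  # reached a new branch: keep the input
            covered |= hit
            corpus.append(candidate)
        history.append(len(covered))
    return history


def saturation_point(history: list[int], window: int) -> Optional[int]:
    """Index of the last coverage gain once `window` flat runs have followed it.

    Returns None if coverage never stays flat for `window` consecutive runs.
    """
    last_gain = 0
    for i in range(1, len(history)):
        if history[i] > history[i - 1]:
            last_gain = i
        elif i - last_gain >= window:
            return last_gain
    return None


if __name__ == "__main__":
    h = fuzz(20_000, seed=1)
    print("branches covered:", h[-1], "of 4")
    print("saturated after run:", saturation_point(h, window=5_000))
```

A flat coverage curve only says the current mutations stopped finding new paths; with a smaller `window`, the same history could be declared saturated before the deep branch is ever reached.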

LLM-based systems have autonomously found flaws in the SQLite database engine before. In August, Team Atlanta’s AI agent, Atlantis, discovered six zero-days in SQLite3 and autonomously patched one of them during DARPA’s White House-backed AI Cyber Challenge. Team Atlanta’s discovery inspired the Big Sleep team to use AI “to see if we could find a more serious vulnerability,” the researchers said (see: White House Debuts $20M Contest to Exterminate Bugs With AI).