
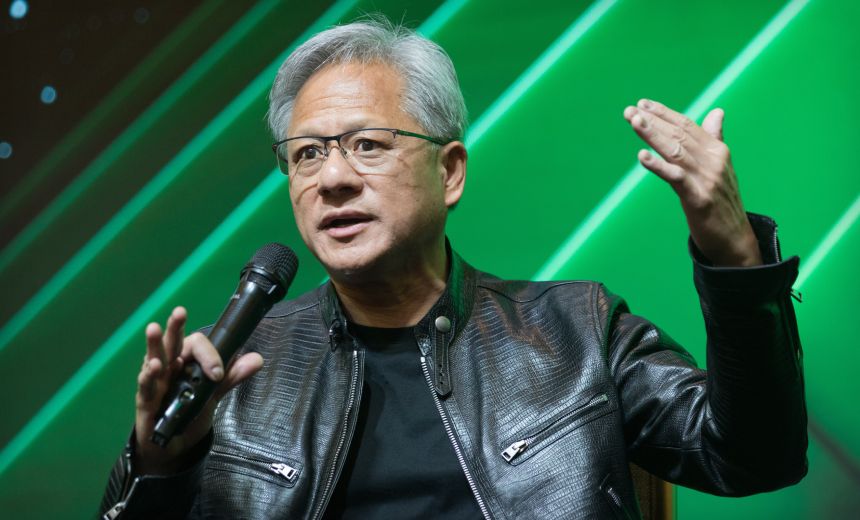

Chipmaker CEO Huang Launches Alpamayo Models, Rubin Platform

When an autonomous vehicle encounters a malfunctioning traffic light at a busy intersection, Nvidia wants it to think through the problem rather than simply react.


Nvidia founder and CEO Jensen Huang opened the CES 2026 trade show in Las Vegas on Monday. He launched Alpamayo, an open family of reasoning models for autonomous vehicle development, and Rubin, the company's first extreme-codesign, six-chip AI platform, which is now in full production.

“The ChatGPT moment for robotics is here. Breakthroughs in physical AI – models that understand the real world, reason and plan actions – are unlocking entirely new applications,” he said, adding that “robotaxis are among the first to benefit.”

At the core of the Alpamayo family sits Alpamayo R1, which Nvidia describes as the first open reasoning vision-language-action model for autonomous driving. "Not only does it take sensor input and activates steering wheel, brakes and acceleration, it also reasons about what action it is about to take," Huang said during his keynote speech.

Ali Kani, Nvidia's vice president of automotive, said the 10-billion-parameter model allows autonomous vehicles to handle complex edge cases they have not encountered before. "It does this by breaking down problems into steps, reasoning through every possibility, and then selecting the safest path," Kani said.

The Alpamayo portfolio includes AlpaSim, an open simulation blueprint for autonomous vehicle testing, along with a dataset containing more than 1,700 hours of driving data. Developers can access Alpamayo R1’s code on Hugging Face and the AlpaSim framework on GitHub.

Mercedes-Benz will be the first automaker to deploy Alpamayo in production vehicles. Huang announced that the new Mercedes-Benz CLA, which comes to the United States this year, will feature AI-defined driving built on Nvidia's Drive platform.

Ola Kallenius, CEO of Mercedes-Benz Group AG, urged caution about deployment. "If you're moving an object that weighs 4,000 pounds, and it's moving at 50 miles an hour, sorry is not going to cut it," Kallenius reportedly said. "You don't have to rush into the market."

Huang said there was growing momentum behind Drive Hyperion, Nvidia's Level 4-ready platform, which has been adopted by automakers, suppliers and robotaxi providers. "Our vision is that, someday, every single car, every single truck will be autonomous, and we're working toward that future," Huang said.

The timeline for that vision is unclear. Nvidia's own plan to test a robotaxi service with a partner is slated for 2027, and self-driving vehicles also face pushback from unions concerned about job displacement.

Paolo Pescatore, an analyst at PP Foresight, told the BBC that the announcement strengthens Nvidia's market position. "Nvidia's pivot toward AI at scale and AI systems as differentiators will help keep it way ahead of rivals," he said.

Huang also introduced the Rubin platform, named after astronomer Vera Rubin. Succeeding Blackwell, the platform pairs next-gen GPUs with new CPUs and advanced networking to support faster, more efficient AI inference at scale. It aims to deliver AI tokens at one-tenth the cost of previous systems. “The faster you train AI models, the faster you can get the next frontier out to the world,” Huang said.

Nvidia also introduced AI-native storage with its Inference Context Memory Storage Platform, a key-value (KV) cache tier that the company says boosts long-context inference with five times higher tokens per second and five times better power efficiency.

Dion Harris, Nvidia senior director for AI infrastructure, detailed the storage innovation: “As one starts to enable new types of workflows, like agentic AI or long-term tasks, there is a lot of stress and requirements on the KV cache,” Harris reportedly said. “So, we’ve introduced a new tier of storage that connects externally to the compute device.”

Huang detailed Nvidia’s open models strategy spanning six domains: Clara for healthcare, Earth-2 for climate science, Nemotron for reasoning and multimodal AI, Cosmos for robotics and simulation, GR00T for embodied intelligence and Alpamayo for autonomous driving. “These models are open to the world,” Huang said. “You can create the model, evaluate it, guardrail it and deploy it.”

The CEO demonstrated a personalized AI agent running locally on the Nvidia DGX Spark desktop supercomputer and embodied through a Reachy Mini robot using Hugging Face models. “The amazing thing is that is utterly trivial now, but yet, just a couple of years ago, that would have been impossible, absolutely unimaginable,” Huang said.

Enterprises including Palantir, ServiceNow, Snowflake, CodeRabbit, CrowdStrike, NetApp and Semantec are integrating Nvidia AI to power their products, Huang said. “Whether it’s Palantir or ServiceNow or Snowflake – and many other companies that we’re working with – the agentic system is the interface,” he said.

“Computing has been fundamentally reshaped as a result of accelerated computing, as a result of artificial intelligence,” Huang said. “What that means is some $10 trillion or so of the last decade of computing is now being modernized to this new way of doing computing.”