Google Pushes for Gen AI and Platformization to Counter Sophisticated Threats

Security is becoming more complex and harder to manage because of siloed solutions and increasingly sophisticated threats. Security practitioners are bogged down by repetitive tasks and manual remediation, and the industry continues to face a cybersecurity talent shortage. The bottom line, according to Google, is that security needs to be simplified through consolidated solutions, and generative AI can help achieve this over time.


During his keynote address at the Google Cloud Security Summit in Mumbai last month, Abhishek A Hemrajani, director of product management for cloud security at Google, said, “Security does feel complex because there are overlapping or duplicated tools. There are gaps in the system that are not secured, and the complexity also comes from silos.”

“As a company gets bigger, there are different teams responsible for identity, platform and security monitoring. We don’t know who has what piece of that jigsaw puzzle, but somehow it all needs to come together and be optimized in this context,” he said.

He told ISMG that conventional security approaches continue to fall short in addressing the pace, velocity and complexity of threats. “Attackers are employing increasingly sophisticated techniques, leveraging zero-day vulnerabilities targeted at cloud and edge devices. All that is just making that problem [worse],” he said.

Meanwhile, attackers are using AI to improve their techniques and introduce more sophisticated threat vectors. To keep up, defenders must also adopt AI.

Gen AI: Inflection Point of Security

Speaking at Google Cloud Next ’24 in April, Anton Chuvakin, senior security staff at Google Cloud, said, “We need to evolve defenses at the speed AI evolves. This applies especially to the adaptability and resilience requirements for security controls.”

“Google engineers are working to secure AI and to bring AI to security practitioners,” said Steph Hay, senior director of Gemini + UX for cloud security at Google. “Gen AI represents the inflection point of security. It is going to transform security workflows and give the defender the advantage.”

Hay outlined three macro trends for gen AI and security:

- Attacks on AI are increasing, thereby requiring secure-by-default solutions.

- Large language models aren’t always efficient or sufficient. Specialized systems and agents are required.

- Trust in gen AI hasn’t been fully established. Contextualized responses are required.

Securing AI With SAIF

Last year, Google launched the Secure AI Framework, or SAIF, a conceptual framework for securing AI systems and workloads in the cloud. SAIF is designed to address top-of-mind concerns for security professionals, such as AI/machine learning model risk management, security and privacy, and to help ensure that AI models are secure by default when they are implemented.

Google Cloud aims to deliver the safest AI platform through this framework. It also wants to evolve the security life cycle to be semi-autonomous and, eventually, autonomous using Gemini.

Convergence

Google also advocates converging security products and embedding AI across the entire security ecosystem. It hopes to drive this convergence through Mandiant, VirusTotal, Safe Browsing and Google Cloud Platform.

Google is making this convergence possible by taking a platform-centric approach through its Security Command Center, or SCC. Hemrajani said SCC is designed to unify security categories such as cloud security posture management, Kubernetes security posture management, entitlement management and threat intelligence. Security information and event management and security orchestration, automation and response also need to converge.

“SCC is bringing all of these together to be able to model the risk that you are exposed to in a holistic manner,” he said. “We also realize that there is a power of convergence between cloud risk management and security operations. We need to converge them even further and bring them together to truly benefit.”

Transforming SecOps

The volume, velocity and variety of threats have grown manifold over the decades, and legacy SecOps cannot detect such threats accurately. First, there often isn't enough telemetry data or context to derive insights and analyze root causes, and it is unclear whether a given threat or threat actor is relevant to the organization. Second, traditional security operations depend on skilled professionals such as security analysts, and this talent is scarce.

“Our inference report says that 63% of last year’s breaches were reported by an external entity. We need to minimize that and scale without limits,” Hemrajani said. This gap can be closed through AI-enabled threat intelligence.

Applied Threat Intelligence

“The reality is that taking threat intelligence and arriving at targeted outcomes is still very difficult. Oftentimes that’s because [feeds] are noisy; sometimes they just won’t intersect the right way or you have underwhelming outcomes. We want to solve that with our new Applied Threat Intelligence offering,” Hemrajani said.

Google Cloud believes Applied Threat Intelligence can help identify and respond to threats more proactively. It does so by continually analyzing and evaluating security telemetry against indicators of compromise, which are curated by Mandiant’s threat intelligence team.
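The core idea of evaluating telemetry against curated indicators of compromise can be illustrated with a minimal Python sketch. This is not Google's implementation: the `Indicator` type, the `match_telemetry` function, the event field names and the sample values (IP addresses and domains from documentation-reserved ranges) are all hypothetical. A production system would likely also weigh indicator confidence, age and context, which this sketch omits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Indicator:
    """Hypothetical indicator of compromise: a type (e.g. "ip") and a value."""
    kind: str
    value: str
    source: str = "curated-feed"  # placeholder label, not a real feed name


def match_telemetry(events, indicators):
    """Return (event, indicator) pairs where an event field equals a known IoC.

    Each event is a dict mapping an indicator kind (e.g. "ip", "domain",
    "sha256") to the value observed in that log record. Field names are
    illustrative assumptions, not a real log schema.
    """
    # Index indicators by (kind, lowercased value) so each lookup is constant-time.
    index = {(ioc.kind, ioc.value.lower()): ioc for ioc in indicators}
    hits = []
    for event in events:
        for kind, observed in event.items():
            ioc = index.get((kind, str(observed).lower()))
            if ioc is not None:
                hits.append((event, ioc))
    return hits


if __name__ == "__main__":
    iocs = [
        Indicator("ip", "203.0.113.7"),
        Indicator("domain", "bad.example"),
    ]
    telemetry = [
        {"ip": "198.51.100.2", "domain": "intranet.example"},
        {"ip": "203.0.113.7", "domain": "cdn.example"},
        {"ip": "192.0.2.10", "domain": "BAD.example"},
    ]
    for event, ioc in match_telemetry(telemetry, iocs):
        print(f"{ioc.kind} match on {ioc.value}: {event}")
```

Indexing indicators by type and normalized value means the cost of continuous matching grows with the volume of telemetry rather than with telemetry volume multiplied by the size of the indicator feed.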

Secret Sauce: SecLM

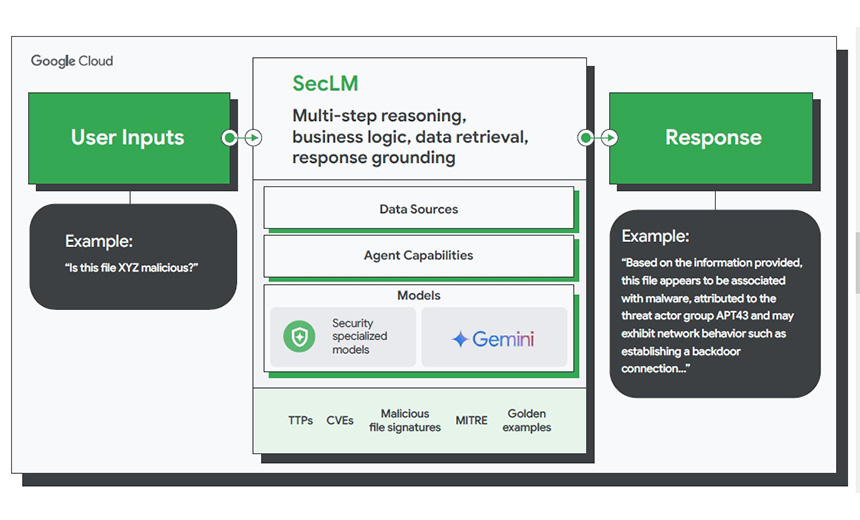

According to Google, general-purpose LLMs alone are not sufficient to solve real-world security problems. Google is therefore infusing gen AI capabilities into its security products and platforms with SecLM, a security-specialized API. The API combines multiple models, business logic, retrieval and grounding into a cohesive system that can help solve security-related tasks with minimal input.

According to a Google whitepaper titled “Product Vision for AI Security,” SecLM is tuned for security-specific tasks and benefits from the latest advances in AI from Google DeepMind as well as from Google’s threat intelligence and security data. Because it runs on the Vertex AI serving platform, SecLM can offer enterprise-grade privacy, security and compliance guarantees: it does not save customer prompts and responses or use them to train Google’s models (refer to the below image).

“SecLM [part of Gemini in security] can help our clients transform their security operations by bringing security insights to gen AI solutions in an open and extensible platform. We see our SOC modernization efforts particularly benefiting from the faster time to value enabled by SecLM’s customization and orchestration capabilities,” said Upen Sachdev, principal, Deloitte & Touche.