
Experts Weigh the Advantages and Risks of Generative Adversarial Networks

Fraudsters are crafting synthetic identities with such finesse that conventional detection methods are falling behind. Fraud detection tools, whether rules-based or built on machine learning, are plagued with false positives and can still miss fraudulent activity.


A 60% surge in synthetic identity fraud in 2024 compelled some banks and fintechs to explore advanced artificial intelligence tools, including generative adversarial networks, or GANs, which simulate fraudulent transactions and help identify hidden patterns.

Generative adversarial networks model everyday transaction behaviors and generate synthetic examples of fraudulent transactions, which in turn help AI models learn and detect patterns that might otherwise go unnoticed. Early implementations indicate that adversarial AI can cut false positive rates in half.

“It is a powerful tool,” said Anurag Mohapatra, senior product manager at NICE Actimize.

Security and fraud teams at banks are seeing promise in this emerging application of generative AI, but they also must navigate steep implementation costs, complex regulatory challenges and ethical concerns over consumer data privacy.

Adversarial AI offers advanced capabilities in data generation, anomaly detection and feature learning, Mohapatra said. Each generative adversarial network pits two neural networks against each other: a generator and a discriminator. The generator produces artificial transactions designed to mimic genuine fraudulent behavior, while the discriminator, trained on historical fraud cases, learns to tell those synthetic transactions apart from real ones. Because each network improves by trying to beat the other, the synthetic fraud cases grow steadily more realistic, and training detection systems on them challenges those systems to recognize increasingly nuanced fraud patterns.
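To make the generator-discriminator interplay concrete, here is a deliberately tiny sketch in plain Python. It is illustrative only and rests on assumptions the article does not state: each transaction is reduced to a single scaled number, the "historical fraud" data is simulated, the generator is a linear map `a*z + b` and the discriminator is a logistic regression `sigmoid(w*x + c)`, standing in for the deep networks a production system would use. All names and hyperparameters are hypothetical.

```python
import math
import random

random.seed(0)

def sigmoid(t):
    # Numerically safe logistic function.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

# Hypothetical historical fraud cases: one scaled feature per transaction,
# simulated here as normally distributed around 3.0.
real_fraud = [random.gauss(3.0, 0.5) for _ in range(1000)]

# Generator G(z) = a*z + b turns random noise z ~ N(0, 1) into a synthetic case.
a, b = 1.0, 0.0
# Discriminator D(x) = sigmoid(w*x + c): estimated probability that x is a
# real fraud case rather than a generated one.
w, c = 0.0, 0.0

lr, batch = 0.05, 64
for step in range(2000):
    # Discriminator step: minimize -log D(real) - log(1 - D(fake)).
    real = random.sample(real_fraud, batch)
    fake = [a * random.gauss(0, 1) + b for _ in range(batch)]
    gw = gc = 0.0
    for x in real:
        g = sigmoid(w * x + c) - 1.0  # d(loss)/d(logit) for label 1
        gw += g * x
        gc += g
    for x in fake:
        g = sigmoid(w * x + c)        # d(loss)/d(logit) for label 0
        gw += g * x
        gc += g
    w -= lr * gw / (2 * batch)
    c -= lr * gc / (2 * batch)

    # Generator step (non-saturating loss): minimize -log D(G(z)),
    # i.e. move synthetic cases toward what D currently scores as real.
    ga = gb = 0.0
    for _ in range(batch):
        z = random.gauss(0, 1)
        x = a * z + b
        g = (sigmoid(w * x + c) - 1.0) * w  # d(loss)/dx via the chain rule
        ga += g * z
        gb += g
    a -= lr * ga / batch
    b -= lr * gb / batch

synthetic = [a * random.gauss(0, 1) + b for _ in range(5)]
print("generator offset b =", round(b, 2))
print("sample synthetic cases:", [round(x, 2) for x in synthetic])
```

The alternating updates are the core of the adversarial process: the discriminator is nudged to separate real from generated cases, then the generator is nudged to fool it. A linear discriminator can only react to differences in location, so this toy generator is not expected to reproduce the full shape of the data, and plain gradient steps can oscillate rather than settle; real deployments use richer models and training safeguards.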

Early Successes

Banks such as Swedbank and fintech pioneers including China’s Ctrip Finance have implemented generative adversarial networks. Uri Lerner, senior principal of research at Gartner, pointed out that Swedbank reduced false positives by 50%, and the bank’s investigation efficiency improved by 20%. “Their approach serves as a blueprint for financial institutions looking to modernize fraud prevention while maintaining explainability and operational efficiency,” Lerner said.

This emerging technology also improves know-your-customer (KYC) processes in anti-money laundering programs. By generating synthetic customer data for machine learning training, it supports regulatory compliance while preserving data privacy. This capability is particularly valuable for training fraud detection models, because privacy regulations often limit access to authentic customer datasets. Organizations can also bolster anomaly detection by modeling typical transaction behavior and flagging deviations that could indicate money laundering or fraud. And because synthetic data can enrich training datasets with rare but critical fraud cases, it improves the robustness of detection models.
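One common way synthetic data helps with rare fraud cases is by rebalancing a training set before a detector is fit. The sketch below assumes a hypothetical dataset of 980 legitimate and 20 fraudulent transactions, each reduced to one scaled feature. To keep it short, a normal distribution fitted to the real fraud cases is swapped in for a trained GAN generator; `sample_synthetic_fraud` and `augment` are hypothetical helper names, not part of any library.

```python
import random
import statistics

random.seed(1)

# Hypothetical imbalanced training set: one scaled feature per transaction.
legit = [random.gauss(0.0, 1.0) for _ in range(980)]
fraud = [random.gauss(3.0, 0.5) for _ in range(20)]

def sample_synthetic_fraud(real_fraud, n):
    """Stand-in for a trained GAN generator (an assumption for illustration):
    draws n cases from a normal distribution fitted to the real fraud cases."""
    mu = statistics.fmean(real_fraud)
    sigma = statistics.stdev(real_fraud)
    return [random.gauss(mu, sigma) for _ in range(n)]

def augment(legit, fraud):
    """Top up the rare fraud class with synthetic cases until both classes
    are the same size; labels are 0 for legitimate, 1 for fraud."""
    synthetic = sample_synthetic_fraud(fraud, len(legit) - len(fraud))
    X = legit + fraud + synthetic
    y = [0] * len(legit) + [1] * (len(fraud) + len(synthetic))
    return X, y

X, y = augment(legit, fraud)
print(len(X), sum(y))  # 1960 980
```

A detector trained on `X` and `y` then sees as many fraud examples as legitimate ones. Balancing to exactly 50/50 is just one choice; a smaller synthetic share is also common, and the value of the augmented set depends on how realistic the generator's output is.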

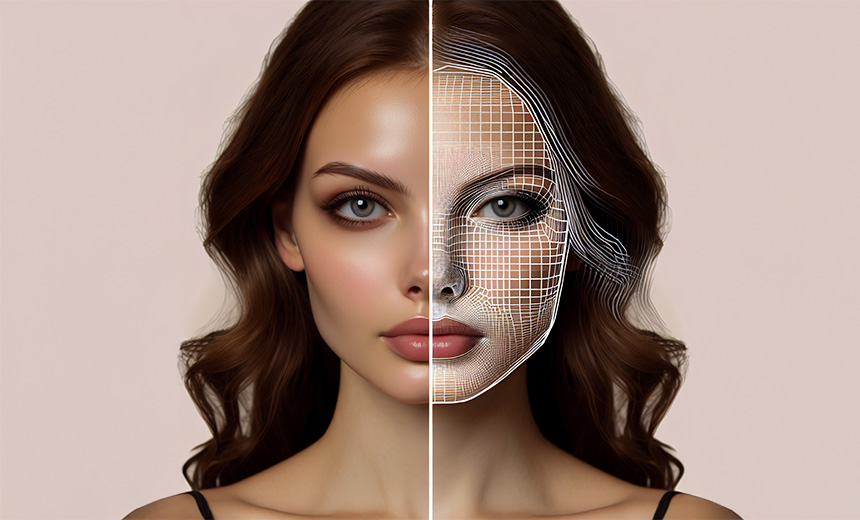

Financial institutions also use these networks for facial recognition, generating high-quality images and simulating adversarial attacks. These simulations help refine identity verification systems so that banks are better equipped to handle sophisticated fraud. By modeling everyday transaction patterns and simulating various fraudulent techniques, the technology provides a controlled environment for testing and refining fraud detection systems before real-world threats materialize. This predictive capacity can play a crucial role in the early detection and prevention of fraud.

Challenges and Risks

Despite these advantages, organizations are facing challenges in deploying this AI technology.

Implementation requires substantial computing power and technical expertise. "GANs may be cost prohibitive to smaller organizations, who may still have legacy systems not capable of enduring the high computing power necessary to deploy GANs," Mohapatra said.

Consumer privacy is another major concern. Jennifer Pitt, senior analyst at Javelin, cautioned: "GANs also use legitimate consumer data to train the model to differentiate fraud from legitimate account activity. Because of this, consumer privacy concerns must be taken into consideration."

Financial institutions must be transparent about their data collection practices, clarifying what data is collected, why it is collected and how it will be used, she said.

By simulating fraudulent activities and generating synthetic data, generative adversarial networks are helping to create more accurate and robust detection systems, reducing false positives and streamlining investigations. But as the technology matures, it brings its own set of challenges, including high costs, integration hurdles and complex ethical and regulatory issues. Balancing these factors will be essential for defending against financial fraud.